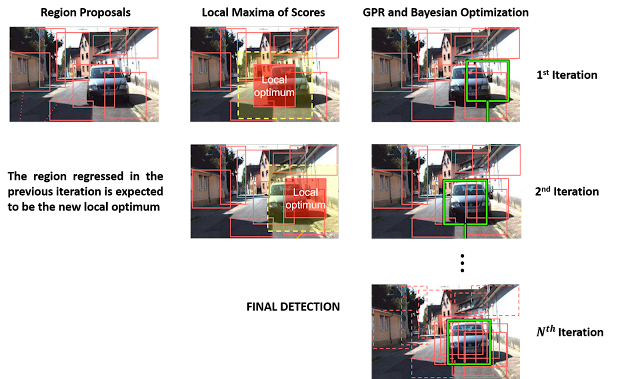

Object detection by sliding window is now a relic of the past. Modern approaches such as R-CNN instead score region proposals using a CNN. This is faster because it requires scoring only a few hundred bounding boxes rather than searching exhaustively over the entire image. However, such a detector can only be as good as the region proposal mechanism under the hood. Zhang et al. present an approach that overcomes this limitation: they use the scores evaluated at the region proposals to iteratively regress to better object locations using Bayesian inference and Gaussian Process Regression (GPR). I will make simplifying assumptions to bring out the main idea; the actual implementation can be looked up in their publication.

Approach of Zhang et al. in "Improving Object Detection with CNN via Bayesian Optimization and Structured Prediction"

Gaussian Process Regression

A Gaussian process is a set of random variables such that any finite subset of them follows a multivariate Gaussian distribution. In object detection these random variables are the scores of different regions in the image frame, one random variable per region. The score $S_y$ we observe for a region $y$ also has some noise $\epsilon_y$ mixed in:

\begin{equation}
S_y = f(y) + \epsilon_y
\end{equation}

where $\epsilon_y \sim \mathcal{N}(0,\beta^{-1})$. In a usual regression setting we would estimate the parameters of $f(\cdot)$ from a set of observations so that, given a new $y'$, we can compute its score. In GPR, instead of directly specifying a parametric form for $f$, we place a prior over the set of all possible functions:

\begin{equation}
f \sim P(f|\theta)
\end{equation}

Note that here a function is interpreted as an infinite-dimensional vector in which each element specifies the function's value at a particular point in its domain. However, we only ever deal with a finite sample from this distribution, namely the function values at the observed data. As the name suggests, in GPR the prior is a Gaussian process:

\begin{equation}
(f(y_1),\cdots,f(y_N)) \sim \mathcal{N}(\mathbf{m}_0,\mathbf{K}_0)
\end{equation}

where $\mathbf{m}_0$ is the mean and $\mathbf{K}_0$ is a Gram matrix whose entries are defined by a kernel $k(y_i,y_j)$. The kernel used in the paper is the squared exponential covariance kernel with automatic relevance determination, which looks like this:

\begin{equation}
k(y_i,y_j) = \eta \exp(-(y_i-y_j)^T \Lambda (y_i-y_j))
\end{equation}
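As a rough illustration of the kernel above, here is a minimal NumPy sketch that builds the Gram matrix. It assumes each region is encoded as a fixed-length vector (for example box coordinates) and that $\Lambda$ is diagonal, as is usual for automatic relevance determination. The function name `se_ard_kernel` and the argument `lam` (the diagonal of $\Lambda$) are my own and not from the paper.

```python
import numpy as np

def se_ard_kernel(Y1, Y2, eta, lam):
    """Gram matrix of k(a, b) = eta * exp(-(a - b)^T diag(lam) (a - b)).

    Y1: (n, d) array of region vectors, Y2: (m, d) array of region vectors.
    lam: (d,) array, assumed here to be the diagonal of Lambda.
    """
    Y1 = np.atleast_2d(np.asarray(Y1, dtype=float))
    Y2 = np.atleast_2d(np.asarray(Y2, dtype=float))
    lam = np.asarray(lam, dtype=float)
    diff = Y1[:, None, :] - Y2[None, :, :]              # pairwise differences, (n, m, d)
    sq = np.einsum("nmd,d,nmd->nm", diff, lam, diff)    # weighted squared distances
    return eta * np.exp(-sq)
```

Calling `se_ard_kernel(Y, Y, eta, lam)` on the $N$ observed regions gives $\mathbf{K}_0$; its diagonal is always $\eta$, since every region is at distance zero from itself.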

But we are interested in the distribution of the observed scores $\mathbf{S}=\{S_{y_1},\cdots,S_{y_N}\}$, which, by marginalizing over $\mathbf{f}=\{f(y_1),\cdots,f(y_N)\}$, can be written as

\begin{equation}
P(\mathbf{S}) = \int P(\mathbf{S}|\mathbf{f})P(\mathbf{f}) d\mathbf{f}
\end{equation}

To compute this we shall use the following result from Section 2.3.3 of "Pattern Recognition and Machine Learning" by Christopher M. Bishop, which says that if

\begin{align}

P(x) &= \mathcal{N}(x|\mu,\Sigma)\\

P(y|x) &= \mathcal{N}(y|Ax+b,\Lambda)

\end{align}

then $P(y) = \mathcal{N}(y|A\mu + b, \Lambda + A\Sigma A^T)$. In our case

\begin{align}

P(\mathbf{f}) &= \mathcal{N}(\mathbf{f}|\mathbf{m}_0,\mathbf{K}_0) \\

P(\mathbf{S}|\mathbf{f}) &= \mathcal{N}(\mathbf{S}|\mathbf{f},\beta^{-1}\mathbf{I}_N)

\end{align}


which implies that $P(\mathbf{S}) = \mathcal{N}(\mathbf{S}|\mathbf{m}_0,\mathbf{K}_N)$, where $\mathbf{K}_N = \mathbf{K}_0 + \beta^{-1}\mathbf{I}_N$. Furthermore, the mean used in the paper is the same constant in every dimension, that is, $\mathbf{m}_0 = m_0 \mathbf{1}_N$.
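To make the marginal concrete, the sketch below evaluates $\log P(\mathbf{S})$ for a given set of observed scores under $\mathcal{N}(m_0\mathbf{1}_N, \mathbf{K}_0 + \beta^{-1}\mathbf{I}_N)$. The function names are hypothetical, and using a Cholesky factorization is simply a common numerical choice on my part, not something taken from the paper.

```python
import numpy as np

def noisy_gram(K0, beta):
    """K_N = K_0 + beta^{-1} I_N."""
    K0 = np.asarray(K0, dtype=float)
    return K0 + np.eye(K0.shape[0]) / beta

def log_marginal_likelihood(S, m0, K0, beta):
    """log N(S | m0 * 1_N, K_N), evaluated via a Cholesky factor of K_N."""
    S = np.asarray(S, dtype=float)
    KN = noisy_gram(K0, beta)
    r = S - m0                                   # residual from the constant mean
    L = np.linalg.cholesky(KN)                   # KN = L L^T
    a = np.linalg.solve(L, r)                    # a = L^{-1} r, so a.a = r^T KN^{-1} r
    logdet = 2.0 * np.sum(np.log(np.diag(L)))    # log |KN|
    n = S.shape[0]
    return -0.5 * (a @ a + logdet + n * np.log(2.0 * np.pi))
```

A quantity like this is what one would typically compare when choosing between candidate values of $m_0$, $\beta$ or the kernel parameters.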

Now let us consider a new region $y_{N+1}$ for which we want to compute a distribution over its score $S_{y_{N+1}}$. In order to get this we shall first compute the joint distribution of $\mathbf{S}_{N+1} = \{\mathbf{S},S_{y_{N+1}}\}$ which by straightforward extension is given by

\begin{equation}

P(\mathbf{S}_{N+1}) = \mathcal{N}(\mathbf{S}_{N+1}|m_0\mathbf{1}_{N+1},\mathbf{K}_{N+1})

\end{equation}

where $\mathbf{K}_{N+1}$ can be written in terms of $\mathbf{K}_N$ as

\begin{equation}

\mathbf{K}_{N+1} =

\left[

\begin{array}{cc}

\mathbf{K}_N & \mathbf{k}_{N+1}\\

\mathbf{k}_{N+1}^T & k_{N+1}

\end{array}

\right]

\end{equation}

where

\begin{align}

\mathbf{k}_{N+1} &= [k(y_{N+1},y_1), \cdots, k(y_{N+1},y_N)]^T \\

k_{N+1} &= k(y_{N+1},y_{N+1}) + \beta^{-1}

\end{align}

Finally we condition on the previous scores $\mathbf{S}$ to get the conditional distribution of $S_{y_{N+1}}$. For this we use the following property of Gaussian random variables from Section 2.3.1 of Bishop's book. Let $\mathbf{x}_a,\mathbf{x}_b$ partition the variables of a multivariate Gaussian distribution, with a corresponding partitioning of the mean and covariance matrix as

\begin{align}

\mathbf{\mu} &=

\left[

\begin{array}{c}

\mathbf{\mu}_{a}\\

\mathbf{\mu}_{b}

\end{array}

\right] \\

\mathbf{\Sigma} &=

\left[

\begin{array}{cc}

\mathbf{\Sigma}_{aa} & \mathbf{\Sigma}_{ab} \\

\mathbf{\Sigma}_{ba} & \mathbf{\Sigma}_{bb}

\end{array}

\right]

\end{align}

then

\begin{equation}
P(\mathbf{x}_b|\mathbf{x}_a) = \mathcal{N}(\mathbf{x}_b|\mathbf{\mu}_b+\mathbf{\Sigma}_{ba} \mathbf{\Sigma}_{aa}^{-1} (\mathbf{x}_a-\mathbf{\mu}_a), \: \mathbf{\Sigma}_{bb} - \mathbf{\Sigma}_{ba}\mathbf{\Sigma}_{aa}^{-1}\mathbf{\Sigma}_{ab})
\end{equation}
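To apply this property to the GP, one way to read off the correspondence, using only the symbols already defined, is

\begin{align}
\mathbf{x}_a &= \mathbf{S}, & \mathbf{x}_b &= S_{y_{N+1}}, \\
\mathbf{\mu}_a &= m_0\mathbf{1}_N, & \mathbf{\mu}_b &= m_0, \\
\mathbf{\Sigma}_{aa} &= \mathbf{K}_N, & \mathbf{\Sigma}_{ab} &= \mathbf{k}_{N+1}, \\
\mathbf{\Sigma}_{ba} &= \mathbf{k}_{N+1}^T, & \mathbf{\Sigma}_{bb} &= k_{N+1}
\end{align}

Here the bold $\mathbf{k}_{N+1}$ is the vector of kernel values against the observed regions and the plain $k_{N+1}$ is the scalar that includes the noise term $\beta^{-1}$.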

Substituting the terms we get

\begin{equation}
P(S_{y_{N+1}}|\mathbf{S}) = \mathcal{N}(S_{y_{N+1}}|\mu,\sigma)
\end{equation}

where $\mu = m_0 + \mathbf{k}_{N+1}^T\mathbf{K}_N^{-1}[S_{y_j}-m_0]_{j=1,\cdots,N}$ is the mean and $\sigma = k_{N+1}-\mathbf{k}_{N+1}^T\mathbf{K}_N^{-1}\mathbf{k}_{N+1}$ is the variance. This is the primary result of GPR: it gives a distribution over the score at any new location given the scores at all previously observed locations.
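The predictive mean and variance translate almost line for line into NumPy. The sketch below assumes the kernel values have already been computed; the function name `gp_posterior` and its argument names are hypothetical.

```python
import numpy as np

def gp_posterior(K0, k_vec, k_self, S, m0, beta):
    """Predictive mean and variance of the score at a new region.

    K0:     (N, N) noise-free Gram matrix of the observed regions
    k_vec:  (N,) kernel values k(y_new, y_j) for the observed regions
    k_self: scalar k(y_new, y_new), without the noise term
    S:      (N,) observed scores, m0: constant prior mean, beta: noise precision
    """
    K0 = np.asarray(K0, dtype=float)
    k_vec = np.asarray(k_vec, dtype=float)
    S = np.asarray(S, dtype=float)
    KN = K0 + np.eye(K0.shape[0]) / beta       # K_N = K_0 + beta^{-1} I
    w = np.linalg.solve(KN, k_vec)             # K_N^{-1} k (K_N is symmetric)
    mu = m0 + w @ (S - m0)                     # predictive mean
    sigma = k_self + 1.0 / beta - w @ k_vec    # predictive variance
    return mu, sigma
```

Solving the linear system rather than forming $\mathbf{K}_N^{-1}$ explicitly is a standard numerical preference; the result is the same $\mu$ and $\sigma$ as in the formulas above.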

Fine grained search using Bayesian Optimization

Ideally we would like to pick a new location $y_{N+1}$ that would lead to maximum improvement in the score over the best scoring location observed so far. However, since we only have a distribution of score at any given location, we shall pick the next location that maximizes the expected improvement in score which is given by

\begin{equation}
a_{EI}(y_{N+1}|\mathbf{S}) = \int_{\hat{S}_N}^{\infty} (S_{y_{N+1}}-\hat{S}_N)\,P(S_{y_{N+1}}|\mathbf{S})\,dS_{y_{N+1}}
\end{equation}

where $\hat{S}_N = \max_{j=1,\cdots,N}S_{y_j}$. The paper provides a closed-form expression for this acquisition function in terms of $\mu$, $\sigma$ and the cumulative distribution function of the normal distribution; please refer to equation 6 in the paper for the exact expression. In practice, instead of analytically maximizing $a_{EI}$, it is used to score the remaining region proposals.
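For a Gaussian predictive distribution the integral above has a well-known closed form, $(\mu-\hat{S}_N)\Phi(z) + s\,\phi(z)$ with $s=\sqrt{\sigma}$ and $z=(\mu-\hat{S}_N)/s$, where $\Phi$ and $\phi$ are the standard normal CDF and density. The sketch below implements that textbook form; I have not checked that it matches the paper's equation 6 symbol for symbol, so treat it as an illustration. The function name is hypothetical.

```python
import math

def expected_improvement(mu, var, best):
    """Expected improvement over `best` when the score is N(mu, var).

    Standard closed form for a Gaussian predictive distribution;
    `var` is the predictive variance (sigma in the text).
    """
    if var <= 0.0:
        # Degenerate case: no uncertainty, improvement is deterministic.
        return max(mu - best, 0.0)
    s = math.sqrt(var)
    z = (mu - best) / s
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))          # Phi(z)
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)   # phi(z)
    return (mu - best) * cdf + s * pdf
```

The two terms show the usual trade-off: the first rewards regions whose predicted score is already high, the second rewards regions where the prediction is uncertain.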

In summary the steps are:

1. Region Proposal: Propose regions using selective search or similar techniques

2. Pruning: Discard proposals with low classification scores and keep some top-ranked regions

3. Find local maxima of the score; several maxima can correspond to several object instances

4. For each local maximum, select $N$ regions in its vicinity; their scores form $\mathbf{S}$

5. Fit a GP model using these sample scores

6. Select one of the remaining regions using $a_{EI}$ as the scoring criterion

7. Repeat until there are no more acceptable proposals
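The search around a single local maximum can be sketched as the loop below. This is a toy version under several assumptions of my own: candidate regions are plain vectors in a fixed pool, `score_fn` stands in for the CNN scorer, the kernel uses $\eta = 1$ and an isotropic $\Lambda$, and the loop stops after a fixed budget `n_steps` rather than on an acceptability test. All names are hypothetical.

```python
import math
import numpy as np

def kernel(A, B, lam=1.0):
    """Squared exponential kernel with eta = 1 and isotropic Lambda = lam * I."""
    d = A[:, None, :] - B[None, :, :]
    return np.exp(-lam * np.sum(d * d, axis=-1))

def expected_improvement(mu, var, best):
    s = math.sqrt(max(var, 1e-12))
    z = (mu - best) / s
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    return (mu - best) * cdf + s * pdf

def fine_grained_search(candidates, score_fn, init_idx, n_steps, m0=0.0, beta=100.0):
    """Greedy search: score the candidate with the highest expected improvement."""
    candidates = np.asarray(candidates, dtype=float)
    observed = list(init_idx)
    scores = [score_fn(candidates[i]) for i in observed]
    for _ in range(n_steps):
        remaining = [i for i in range(len(candidates)) if i not in observed]
        if not remaining:
            break
        Y, S = candidates[observed], np.asarray(scores)
        KN = kernel(Y, Y) + np.eye(len(observed)) / beta   # K_N
        k_star = kernel(candidates[remaining], Y)          # one row per remaining region
        W = np.linalg.solve(KN, k_star.T).T                # rows are k^T K_N^{-1}
        mu = m0 + W @ (S - m0)
        var = 1.0 + 1.0 / beta - np.sum(W * k_star, axis=1)
        ei = [expected_improvement(m, v, S.max()) for m, v in zip(mu, var)]
        pick = remaining[int(np.argmax(ei))]               # step 6: best by a_EI
        observed.append(pick)
        scores.append(score_fn(candidates[pick]))          # evaluate the scorer there
    best = int(np.argmax(scores))
    return observed[best], scores[best]
```

For example, with `candidates = np.linspace(0, 1, 21).reshape(-1, 1)` and a score that peaks at 0.7, starting from the two end points, the loop spends its budget on whichever candidates the GP currently rates as most promising rather than on a fixed sweep.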

Experimental result

The above graph shows a detection performance comparison in terms of mean average precision (mAP) among variants of Selective Search (SS) and the proposed approach (SS+FTS) for an oracle scoring function on the PASCAL VOC 2007 detection benchmark. An oracle scoring function is one that gives us the true score, which in the case of object detection means that it always gives a higher score to a better localization. Bounding box intersection over union (IOU) was used as the oracle scorer. SS + Local random search follows steps 1 through 3 but then randomly samples bounding boxes in the vicinity of the detected local maxima. The horizontal axis shows an increasingly strict criterion (IOU with the ground truth region) for declaring a predicted region a correct detection.
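Since IOU serves both as the oracle scorer and as the correctness criterion, here is a minimal sketch of how it is computed. It assumes boxes are given as `(x1, y1, x2, y2)` corner coordinates with `x1 < x2` and `y1 < y2`, which is my own choice of convention.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap, clamped at zero for disjoint boxes.
    iw = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    ih = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = iw * ih
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0
```

Two 2x2 boxes offset by one unit in each direction overlap in a 1x1 square, giving an IOU of 1/7. This shows how demanding a threshold such as 0.9 is.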

Clearly, for lower IOU thresholds all methods perform quite well, meaning that Selective Search quite often predicts a detection region somewhere in the vicinity of the ground truth. However, as we demand more accurate localization, most methods drop to an mAP of 30%, except the proposed approach, which maintains 95%. It is no surprise that increasing the number of SS region proposals from 2000 to 10000 does better, hence the mAP of 50% for SS quality. What is surprising is that SS+FTS with just 2100 boxes beats a purely region-proposal-driven detection approach by a margin of 45 percentage points at an IOU of 0.9.

Mean Average Precision (mAP)

A little about the metric itself. It is important to understand metrics in order to understand how algorithms compare to each other. A good metric should reflect the expectations from the algorithm. For detection we expect two things from our detector:

- If there is an object, it should detect it, which is quantified by Recall

- If it believes there is an object at a location, then there actually should be an object there, which is quantified by Precision

Note that achieving one does not necessarily mean achieving the other. For example, a detector that says there is an object at every location has perfect Recall but extremely low Precision. Similarly, a detector that always says there is no object at any location has perfect Precision but 0 Recall. Hence there is a sweet spot to be identified.

So now assume our algorithm produces a list of detection regions along with corresponding confidence scores for each image. These detections are ranked in order of decreasing confidence across all images. Each detection is then marked as a true positive (TP) or a false positive (FP) by assigning it to the closest ground truth and comparing the IOU with a threshold (x axis of the above plot). For each position $k$ in the ranked list one can compute precision and recall at top $k$ as $\frac{\#TP}{k}$ and $\frac{\#TP}{\#P}$ respectively, where $\#TP$ counts the true positives among the top $k$ detections and $\#P$ is the total number of ground truth objects. Note that recall never decreases with $k$, but precision may increase or decrease. So to avoid the wiggle, we interpolate the precision as follows

\[
p_{interp}(r) = \max_{\tilde{r} \geq r} p(\tilde{r})
\]

where $p(\tilde{r})$ is the precision at recall $\tilde{r}$. Note that we only have discrete measurements at $\tilde{r} = r(k)$ for some $k$. The precision-recall curve is then summarized by the average precision, defined as the mean of the interpolated precision over eleven equally spaced recall levels

\[
AP = \frac{1}{11}\sum_{r\in\{0,0.1,\cdots,1\}} p_{interp}(r)
\]
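The whole computation, from a ranked list of TP/FP labels to AP, fits in a few lines. The sketch below uses the eleven-point average with the $\frac{1}{11}$ normalization of the PASCAL VOC 2007 protocol. The function name and the convention of returning zero precision when no detection reaches a recall level are my own choices.

```python
import numpy as np

def eleven_point_ap(is_tp, num_positives):
    """Eleven-point interpolated average precision.

    is_tp: TP (1) / FP (0) flags for detections sorted by decreasing confidence.
    num_positives: total number of ground truth objects (#P).
    """
    is_tp = np.asarray(is_tp, dtype=float)
    tp_cum = np.cumsum(is_tp)                   # #TP among the top k
    k = np.arange(1, len(is_tp) + 1)
    precision = tp_cum / k
    recall = tp_cum / num_positives
    ap = 0.0
    for r in np.linspace(0.0, 1.0, 11):
        reached = recall >= r - 1e-12           # small tolerance for float round-off
        p_interp = precision[reached].max() if reached.any() else 0.0
        ap += p_interp / 11.0
    return ap
```

For the ranked labels `[1, 0, 1]` with two ground truth objects, precision is `[1, 0.5, 2/3]` and recall is `[0.5, 0.5, 1]`. The interpolated precision is 1 for the six recall levels up to 0.5 and 2/3 for the remaining five, so AP is $(6 + 10/3)/11 \approx 0.848$.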

Finally, since detectors for multiple contending classes are evaluated simultaneously, the AP values for the different classes are averaged together and reported as mAP.

Conclusion

Poor recall from region proposal algorithms can directly hamper the performance of even the best of detection scoring functions. Gaussian Process Regression along with Bayesian optimization seems to be a very good strategy for efficiently overcoming this issue while keeping the computational advantage of dealing with a sparse set of locations instead of an exhaustive search.